Nanomaterials are being developed for an array of applications including energy, manufacturing, waste treatment, consumer products, and medicine. The proliferation of nanotechnology means that nanomaterials will inevitably come into contact with humans and the environment. Some of this contact can be beneficial, as in the case of nanomedicine or environmental cleanup; however, it can also produce adverse or unintended outcomes. Understanding the health and environmental impact of nanomaterials and developing strategies to mitigate adverse effects are vital to the sustainable and responsible development of nanotechnology.

Currently, small animal models are the ‘gold standard’ for nanomaterial toxicity testing. In a typical assessment, researchers introduce a nanomaterial into a series of laboratory animals, generally rats or mice, or zebrafish, the ‘workhorse’ of toxicity testing (see: "High content screening of zebrafish greatly speeds up nanoparticle hazard assessment"). They then examine where the material accumulates, whether it is excreted or retained in the animal, and the effect it has on tissue and organ function. A detailed understanding often requires dozens of animals and can take many months to complete for a single formulation. The current infrastructure and funding for animal testing are insufficient to support the evaluation of all nanomaterials currently in existence, let alone those that will be developed in the near future. This is creating a growing deficit in our understanding of nanomaterial toxicity, which fuels public apprehension towards nanotechnology.

Dr. André Nel and his coworkers at the California NanoSystems Institute (CNSI) and the University of California Los Angeles (UCLA) are taking a fundamentally different approach to nanomaterial toxicity testing.

Nel believes that, under the right circumstances, resource-intensive animal experiments can be replaced with comparatively simple in vitro assays. The in vitro assays are not only less costly, but they can also be performed using high-throughput (HT) techniques. By using an in vitro high-throughput screening (HTS) approach, comprehensive toxicological testing of a nanomaterial can be performed in a matter of days. Rapid information gathering will allow stakeholders to make rational, informed decisions about nanomaterials during all phases of the development process, from design to deployment.

Over the years, researchers have attempted to use in vitro assessment of nanomaterial toxicity to predict in vivo outcomes. However, the overall correlations have been poor. One of the most common practices is to use the toxicity of nanomaterials in isolated cell cultures as a predictor of toxicity in similar cell types in the body. Nel believes that such comparisons often fail because they are too simplistic and do not adequately reproduce the complexity of the body.

"Originally, there was not enough consideration of what approaches were chosen to assess toxicity in vitro. A cell is not an animal. An intact organ has a lot more complexity than a cell," says Nel. "If you try to make direct comparisons without considering the specific biological basis for performing the testing, you’re going to make mistakes. An in vivo injury response like inflammation depends on many different responses in organs or tissues the material targets. For example, a nanomaterial may produce oxidative stress within epithelial cells and macrophages. Cellular oxidative stress can initiate cytokine and chemokine production, which at the tissue level can lead to a cascade of events that lead to organ inflammation and even systemic effects. If you screen for cell death as your in vitro assay, you may miss out entirely on the axis of non-lethal cellular responses (such as cytokine production) that may connect you to tissue inflammation at the intact animal level. Thus, the in vitro test may not correlate with your animal test, meaning that you cannot make appropriate inferences from the cell death assay. The situation could be different, however, if you chose to study the connection between cellular and tissue inflammation, and will become even stronger if you can connect both in vitro and in vivo responses to nanomaterial properties that lead to oxidative stress."

Rather than using in vitro systems as direct substitutes for the in vivo case, Nel is using a mechanistic approach to connect cellular responses to more complex biological responses, attempting to employ mechanisms that are engaged at both levels and that reflect specific nanomaterial properties.

"You need to align what you test at a cellular level with what you want to know at the in vivo level," says Nel. "If oxidative stress at the cellular level is a key initiating element, then by screening for this outcome in cells you are more likely to yield something more predictive of the in vivo outcome. We can do a lot of our mechanistic work at an implementation level that allows development of predictive screening assays."

By measuring many relevant mechanistic responses and integrating the results, Nel believes that the in vivo behavior of a nanomaterial can be accurately predicted, provided that enough thinking goes into devising the systems biology approach to safety assessment. Nel outlined his vision for nanomaterial toxicity testing, along with his group’s work on its implementation, in an article published on June 7, 2012 in Accounts of Chemical Research ("Nanomaterial Toxicity Testing in the 21st Century: Use of a Predictive Toxicological Approach and High-Throughput Screening"). This work is supported by funding from the NSF, EPA, UC Center for the Environmental Implications of Nanotechnology, NIEHS, and the UCLA Center for Nanobiology and Predictive Toxicology.

On top of speeding up nanomaterial toxicity testing, Nel’s mechanistic screening strategy will also lower the burden on laboratory animals. Animal testing is increasingly viewed as an unethical practice by the public, leaving researchers and regulators searching for alternatives. In Nel’s paradigm, toxicity testing in animals is used sparingly but critically to confirm the in vitro predictions. In addition, by leveraging in vivo results to improve the predictive power of the models, the predictive HTS paradigm makes more rational use of animal tissues because researchers know what to look for. While Nel is hopeful that his approach to toxicity testing may eventually eliminate animal testing altogether, he warns that this is not yet a reality.

"Some regulators foresee phasing out animal experiments in toxicology. I don’t think we’re at that stage yet. We still need in vivo observations, but need to use them sparingly. If we do the job well enough, we may arrive at a point in the future where we can eliminate animal use in toxicology research."

Nel’s approach will influence not only the way in which nanomaterial toxicity is assessed, but also the way in which nanomaterials are developed. Currently, nanomaterials are designed to meet the needs of a particular application. Toxicity is then evaluated retrospectively. Formulations that exhibit unacceptable toxicity at that point may be abandoned after a significant investment in development. Because Nel’s approach generates toxicity information much faster than traditional techniques, it will be possible to integrate toxicity assessment into the design of a new nanomaterial. The proactive characterization of nanomaterial toxicity will provide feedback during the design process, producing formulations that maximize efficacy and minimize risk.

Nel credits the emergence of systems biology as a key factor enabling the development of a mechanistic in vitro approach to nanomaterial toxicity testing. In the pre-genomic era, the inner workings of biological systems were largely unknown, making it difficult to understand how a nanomaterial would perturb them.

"The systems biology approach involves taking an event at the reductionist level and correlating it to the behavior of an intact system," explains Nel. "It is an emerging area of science. Large-scale knowledge generation began about 20 years ago. Only more recently, with the advent of bioinformatics, has reduction and translation of that huge volume of data into practical outcomes begun to occur. This has led to distillation of the information into clinically useful elements that you can use in research. These developments have been the enabling factor for the emergence of the mechanistic approach to toxicity testing."

Nel explains that, in the past, toxicity was assessed using a descriptive approach. In other words, one could only study the effects, without understanding the causes.

"The descriptive approach means that we analyze and assess the effects of a potentially hazardous substance. You use a single material that is administered at many different doses to many different animals. Then you do a series of descriptive measurements of the results. Does the animal lose weight? Was there lethality? Did the animals show differences in grooming or health? What are the biochemical effects in terms of analyzing blood? You end up with an analysis that does not tell you why the material caused the effect. It is limiting in the number of materials you can use and it is resource intensive. If you identify a hazard, it could take a long time to understand what is causing it."

Nel uses the example of silicosis suffered by mine workers in response to inhaled quartz fibers to illustrate the differences between the descriptive and mechanistic approaches.

"Studies in small animals revealed the lung-damaging potential of quartz. Yet, after decades of laboratory research, we still do not have a good understanding of what it is about quartz that is hazardous. Not all forms of silica are hazardous. If you understand the mechanism of toxicity, you can use that mechanism to gather information about a large number of materials in a rapid fashion. You can then use animal experiments sparingly to show that the mechanism correlates with a disease outcome or an injury outcome that is premised on the same mechanism. This will enable a much more rational approach to the assessment of a toxicant."

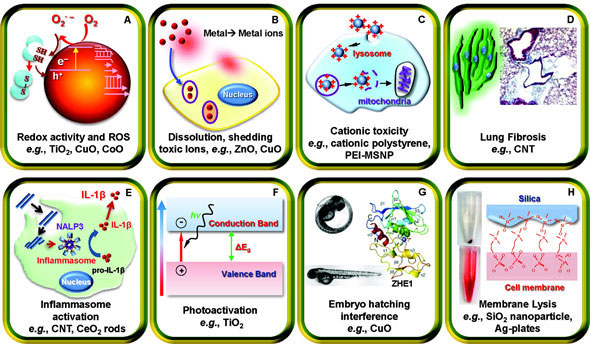

A significant challenge for Nel and his team has been accounting for the many different toxicity mechanisms that a nanomaterial can engage. Nel has taken an iterative approach to the problem: he begins with the pathways that have been identified in the literature, and adds more mechanisms as they are discovered during the course of his studies. So far, the major mechanisms he has identified include redox activity and reactive-oxygen-species generation, dissolution and shedding of toxic ions, cation toxicity, lung fibrosis, inflammasome activation, photoactivation, fish embryo hatching interference, and membrane lysis. Nel believes that these represent only a subset of all the possible mechanisms that are active in the body. As more mechanisms are uncovered, the predictive power of Nel’s strategy will increase.

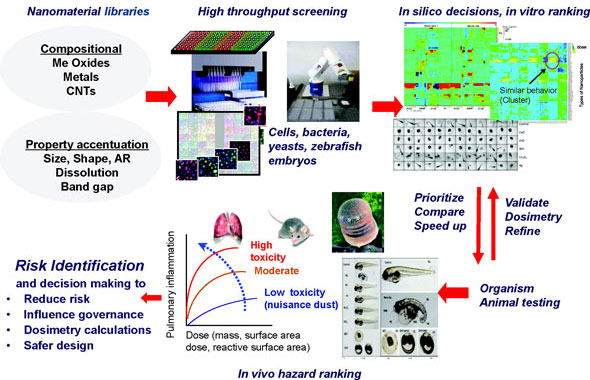

Overview of the predictive toxicity framework. High-throughput screening of nanomaterial hazards, along with in silico decision-making procedures, speeds up risk identification and the regulatory response. (Reprinted with permission from American Chemical Society)

Major mechanistic nanomaterial injury pathways identified by André Nel and his coworkers. (Reprinted with permission from American Chemical Society)

Some of the identified toxicity mechanisms may apply to other material types, such as small molecules; however, Nel emphasizes that nanomaterials are a fundamentally unique class of materials with distinct biological interactions.

"Some aspects of nanomaterial toxicity, such as the release of ions, could overlap with known toxicity pathways. But, to approach it from that standpoint without considering the nanoscale dimensions of the materials would be a mistake. Other phenomena can occur at the nanoscale that do not have a direct analogue at the molecular scale. These include adsorption of components to the surface of a nanomaterial either from the external environment or the physiological environment. The nanomaterial can, in this context, act as a carrier to distribute compounds to new areas of the body, where they would not reach by themselves."

Generalizing nanomaterial toxicity has proven to be a challenge. While specific chemical classes of nanomaterials tend to produce toxicity by a conserved set of mechanisms, Nel has found that, in many cases, chemically similar nanomaterials can produce toxicity by entirely different mechanisms.

"While band gap overlap can predict the ability of metal oxide nanomaterials to induce reactive oxygen species generation and pulmonary damage, some metal oxides, such as ZnO and TiO2, induce damage despite the fact that they do not show a strong overlap. This is likely because they are inducing toxic responses by other mechanisms including dissolution, or photogeneration of reactive oxygen species."

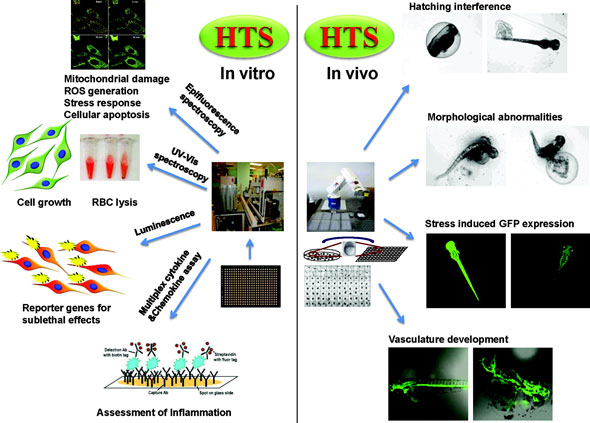

Along with identifying toxicity mechanisms, Nel is also developing in vitro assays to assess them. These assays lie on a spectrum of complexity, ranging from very simple to highly complex. Low-complexity methods include biochemical assays such as enzyme inhibition. Medium-complexity methods include assays on single living cells. Examples include reactive oxygen species generation, stress response, apoptosis, membrane lysis, and reporter gene expression, which can be detected using high content screening. High-complexity methods are based on simplified in vivo systems, including zebrafish embryos. Zebrafish embryos recapitulate many of the biological complexities of small animals, but require significantly fewer resources. Increased complexity comes with a trade-off in terms of throughput and level of automation. Part of Nel’s research is devoted to finding ways to increase the throughput of the more complex, but information-rich, assays.

Generally, the results from the in vitro assays do not define an absolute level of toxicity for any particular nanomaterial. Instead, toxicity is defined relative to standardized nanomaterial libraries with well-characterized responses. By comparing the level of toxicity of a new nanomaterial to the reference library, Nel can produce a hazard ranking that shows how toxic a nanomaterial is likely to be, and by which mechanisms. The ranking can then be used to prioritize further testing of those nanomaterials that show the highest probability of toxicity.
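The relative ranking described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the group's actual pipeline: the assay names, the reference-library values, the candidate materials, and the choice of a z-score as the relative measure are all hypothetical.

```python
from statistics import mean, stdev

# Hypothetical reference-library responses (arbitrary units) for each assay.
REFERENCE = {
    "ros_generation": [1.0, 1.2, 0.8, 1.1, 0.9],
    "membrane_lysis": [0.5, 0.4, 0.6, 0.5, 0.5],
}

def relative_scores(responses, reference=REFERENCE):
    """Express each assay response as a z-score against the reference library."""
    scores = {}
    for assay, value in responses.items():
        ref = reference[assay]
        scores[assay] = (value - mean(ref)) / stdev(ref)
    return scores

def hazard_ranking(candidates, reference=REFERENCE):
    """Return (material, dominant assay, score) tuples, most hazardous first."""
    rows = []
    for name, responses in candidates.items():
        scores = relative_scores(responses, reference)
        top_assay = max(scores, key=scores.get)
        rows.append((name, top_assay, scores[top_assay]))
    return sorted(rows, key=lambda row: row[2], reverse=True)

# Made-up candidate materials, for illustration only.
candidates = {
    "material_A": {"ros_generation": 1.0, "membrane_lysis": 0.5},
    "material_B": {"ros_generation": 2.5, "membrane_lysis": 0.6},
    "material_C": {"ros_generation": 1.1, "membrane_lysis": 1.5},
}

ranking = hazard_ranking(candidates)
for name, assay, score in ranking:
    print(f"{name}: strongest response in {assay} ({score:.1f} SD above reference)")
```

The output is an ordering plus the assay that drives it, which mirrors the idea of ranking a material by how toxic it is likely to be and by which mechanism, rather than assigning an absolute toxicity value.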

Examples of in vitro and simplified in vivo assays used by André Nel and his co-workers to assess nanomaterial toxicity mechanisms. (Reprinted with permission from American Chemical Society)

It is important to recognize that just because a nanomaterial can participate in a toxicity pathway does not mean that it will necessarily produce a pathological outcome in vivo. The pathological outcome depends on the extent to which a nanomaterial activates a particular mechanism. A nanomaterial may, for example, generate reactive oxygen species. However, if the level does not exceed the capability of the endogenous physiological environment to respond, then a pathological outcome may not occur. Nel illustrates this concept by dividing the cellular response to oxidative stress into a multi-tiered hierarchy. Tier 1 occurs with the onset of the cell’s antioxidant defense machinery. Tier 2 occurs as the cell’s natural antioxidant defense is overwhelmed, leading to inflammation and the activation of defensive signaling pathways. Tier 3 proceeds under high levels of oxidative stress, and results in cell death by triggering either apoptosis or necrosis. The ultimate outcome of nanomaterial-induced oxidative stress will depend on which tier is activated.
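The tiered hierarchy can be read as a simple threshold classification. The sketch below is one way to express it in Python; the numeric cut-offs and the single `stress` scale are hypothetical placeholders, since the article gives no quantitative boundaries between tiers.

```python
from enum import Enum

class Tier(Enum):
    NONE = 0                 # stress below the level that triggers any response
    ANTIOXIDANT_DEFENSE = 1  # Tier 1: antioxidant defense machinery switches on
    INFLAMMATION = 2         # Tier 2: defense overwhelmed, inflammation and defensive signaling
    CELL_DEATH = 3           # Tier 3: apoptosis or necrosis

def classify_tier(stress, defense_onset=1.0, defense_capacity=3.0, lethal_level=6.0):
    """Map an oxidative-stress level (arbitrary units) onto the tiered hierarchy.

    All three thresholds are assumed values for illustration only.
    """
    if stress < defense_onset:
        return Tier.NONE
    if stress < defense_capacity:
        return Tier.ANTIOXIDANT_DEFENSE
    if stress < lethal_level:
        return Tier.INFLAMMATION
    return Tier.CELL_DEATH

for level in (0.5, 2.0, 4.0, 8.0):
    print(level, classify_tier(level).name)
```

The point of the sketch is that the same mechanism (reactive oxygen species generation) leads to different outcomes depending on where the measured level falls relative to the cell's capacity to respond.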

On top of rapidly assessing nanomaterial toxicity, Nel’s mechanistic approach can elucidate which physical and chemical properties of the nanomaterial are responsible for producing a toxic outcome. By screening libraries of well-defined nanomaterials, and using algorithms for data transformation, data analysis, and machine learning, Nel is able to produce ‘quantitative structure-activity relationships’ (QSARs) that describe the biological effect of a nanomaterial as a function of its physicochemical properties. In a recent example, Nel and his colleagues synthesized a series of cerium dioxide nanorods and nanowires that had identical composition, but different aspect ratios and lengths. They found that nanorods above a ‘critical’ length and aspect ratio induced proinflammatory responses, lysosomal rupture, and cytotoxicity, while shorter rods avoided these effects. QSARs such as these can be used as a guide to design new nanomaterials that behave in a predictable and desired way. One of the key benefits of QSARs is that the toxicity of a nanomaterial can be predicted before it is ever synthesized.
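As a toy illustration of how a ‘critical’ value of a single physicochemical property might be extracted from screening data, the following Python sketch fits a one-variable threshold rule. It is a deliberately minimal stand-in for the machine-learning methods mentioned above, and the aspect ratios and toxicity labels are invented for the example; they are not data from Nel's study.

```python
def fit_threshold(values, labels):
    """Find the cut-off on one descriptor that best separates toxic from non-toxic.

    values: descriptor values (e.g. aspect ratio); labels: True if toxic.
    Candidate cut-offs are midpoints between neighbouring sorted values;
    the one with the fewest misclassifications is returned.
    """
    pairs = sorted(zip(values, labels))
    xs = [value for value, _ in pairs]
    cuts = [(a + b) / 2 for a, b in zip(xs, xs[1:]) if a != b]

    def errors(cut):
        # A material is predicted toxic when its descriptor exceeds the cut-off.
        return sum((value > cut) != label for value, label in pairs)

    return min(cuts, key=errors)

def predict_toxic(value, cut):
    """Apply the fitted rule to a material that has not been tested (or made) yet."""
    return value > cut

# Invented screening results for a hypothetical nanorod library.
aspect_ratios = [1, 2, 4, 8, 16, 22, 31, 100]
toxic = [False, False, False, False, False, True, True, True]

critical = fit_threshold(aspect_ratios, toxic)
print("estimated critical aspect ratio:", critical)
print("predicted toxic at aspect ratio 50:", predict_toxic(50, critical))
```

A real QSAR would use several descriptors at once and would be validated on materials held out from fitting; the sketch only shows the basic idea of predicting a biological outcome from a measured property.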

Relationships between nanomaterial physicochemistry and toxicity provide two important capabilities. First, one can rationally design nanomaterials from the outset that will avoid toxic outcomes. Second, if a nanomaterial is found to be toxic, the properties that produce toxicity can be isolated, and an appropriate modification can be made to avoid it. Nel calls these ‘safe-by-design’ strategies. So far, he has identified three general ‘safe-by-design’ strategies: covering the nanomaterial with polymers to prevent direct interaction with biomolecules, doping metal or metal oxide nanomaterials to change the electronic structure and prevent dissolution, and passivating surface defects to prevent the generation of reactive oxygen species. In a recent study, Nel’s team found that by passivating surface defects on silver nanoplates with cysteine, the toxicity due to reactive oxygen species generation could be largely eliminated (see the recent Nanowerk Spotlight: "Surface defects on silver nanoparticles hold dangers for aquatic life").

The challenge in implementing ‘safe-by-design’ strategies is that the properties that make a nanomaterial desirable in a particular application are often the same properties that lead to its toxicity.

For example, while electronically-active nanomaterials may be hazardous in a biological setting, this property makes them useful as catalysts and for electronic devices. Nel is making an ongoing effort to find strategies that prevent toxicity while retaining the desirable features of the nanomaterial.

While Nel and his team have made significant developments towards implementing the mechanistic approach to nanotoxicology screening, it is an ongoing endeavour. Nel believes that further development will come from incorporating new approaches to data gathering and processing.

"A mechanistic approach to toxicity assessment includes many different techniques that we’re only starting to make use of. These include proteomic, metabolomic, and genomic approaches for describing phenotypic changes in cells at the biochemical level. In the beginning, we deliberately decided to take injury mechanisms that could be tested in vitro and in vivo, in order to begin knowledge generation. The limitation so far is that we’ve only used mechanisms that we could think of. There are potentially a large number that we don’t know about. In order to get to them, we will have to use new techniques that give us more information. Each of those inter-translations is not going to happen overnight. You will still have to do data reduction and pathway analysis. Now that we’ve shown the feasibility of using the mechanistic approach, we can return to proteomics and genomics to explore domains of knowledge using a much wider platform and implement that in the discovery process."

Proteomic and genomic techniques could also provide information on long-term chronic exposure outcomes including mutagenesis and oncogenesis, for which there is currently little information.

Nel is also searching for ways to incorporate information on fate, transport, and exposure assessment in the mechanistic framework. Understanding where a nanomaterial will locate in the body is vital for predicting where and how it will interact with the biological systems present there.

Moving forward, it remains to be seen how the mechanistic in vitro assessment of toxicity will integrate into the current regulatory framework. The onus will be on the research community to demonstrate convincingly that this approach is capable of providing enough information about nanotoxicology to replace in vivo experiments. While false positives are a concern, they will be comparatively easy to identify. The challenge will be identifying false negatives: cases where a nanomaterial is predicted to be safe, but is actually toxic. On the bright side, quantitative structure-activity relationships have set a regulatory precedent by using chemical structure to predict the toxicity of some industrial chemicals. There is no doubt that it will take time and resources before the mechanistic in vitro approach to toxicity testing of nanomaterials can adequately replace in vivo experiments. However, the ultimate benefits in terms of cost, time, and the lives of animals are powerful motivators.
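The distinction between false positives and the more worrying case of materials predicted safe that turn out to be toxic can be made concrete by comparing in vitro predictions with in vivo outcomes. The Python sketch below is a generic confusion-matrix calculation, offered as an assumption about how such a validation could be tallied; the prediction and outcome lists are invented.

```python
def confusion_counts(predicted_toxic, observed_toxic):
    """Tally agreement between in vitro predictions and in vivo observations."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for predicted, observed in zip(predicted_toxic, observed_toxic):
        if predicted and observed:
            counts["tp"] += 1   # predicted toxic, confirmed toxic
        elif predicted and not observed:
            counts["fp"] += 1   # predicted toxic, actually safe
        elif not predicted and observed:
            counts["fn"] += 1   # predicted safe, actually toxic
        else:
            counts["tn"] += 1   # predicted safe, confirmed safe
    return counts

def false_negative_rate(counts):
    """Fraction of truly toxic materials that the screen predicted to be safe."""
    toxic_total = counts["fn"] + counts["tp"]
    return counts["fn"] / toxic_total if toxic_total else 0.0

def false_positive_rate(counts):
    """Fraction of truly safe materials that the screen flagged as toxic."""
    safe_total = counts["fp"] + counts["tn"]
    return counts["fp"] / safe_total if safe_total else 0.0

# Invented example: five materials screened in vitro and then checked in vivo.
predicted = [True, True, False, False, False]
observed = [True, False, False, True, False]

counts = confusion_counts(predicted, observed)
print(counts)
print("false negative rate:", false_negative_rate(counts))
print("false positive rate:", false_positive_rate(counts))
```

A material flagged as toxic would normally go on to further testing, which is where a false positive gets caught; a false negative is harder to find because a material predicted to be safe may receive no follow-up at all.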

By Carl Walkey, Integrated Nanotechnology & Biomedical Sciences Laboratory, University of Toronto, Canada.

Source: Nanowerk Spotlight